Identity and Access Management

Elevate Your IAM Effectiveness

Let SDG Services Elevate Your IAM Effectiveness, Save Effort and Cost, and Protect Your Assets.

From the increasing threats posed by AI to improvements in behavior analytics, the identity and access landscape is changing quickly. Our 20+ years of experience in identity and access management has helped us guide some of the largest and most diverse enterprises to success.

How SDG’s IAM Services Benefit You:

- Streamlined identity and access management controls

- Vendor-agnostic solutions

- Enhanced security and compliance posture

- Customer-centric, frictionless identity

- Maximized value from existing investments

- Modern and sustainable identity programs

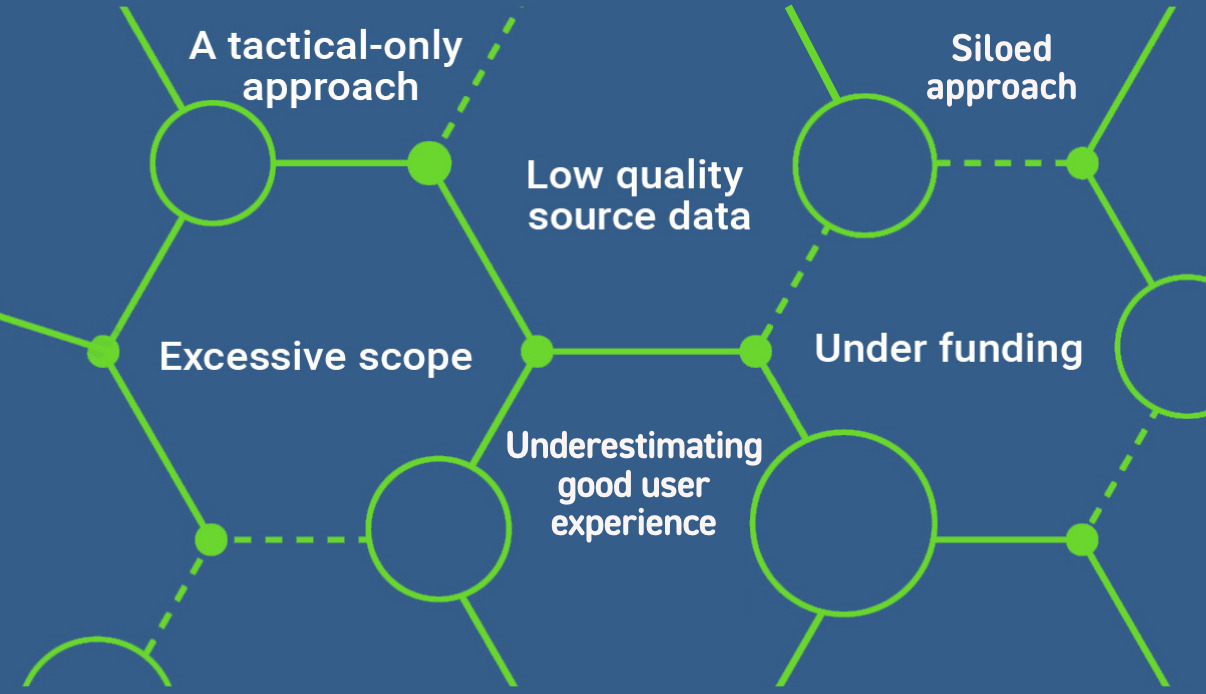

An Unfortunate Number of IAM Projects Fail Due To . . .

- A siloed approach

- Underestimating the importance of a good user experience

- Low-quality source data

- A tactical-only approach

- Underfunding

- Excessive scope

This is when SDG’s 20+ years of IAM service experience matters.

There’s more to IAM than choosing a technology platform. With over 20 years of experience, we know the path to success requires a thorough understanding of the people, processes, and technology involved and how they work together.

Trusted by the World’s Best Organizations